Study warns of ‘significant risks’ in using AI therapy chatbots | TechCrunch

Therapy chatbots powered by large language models may stigmatize users with mental health conditions and otherwise respond inappropriately or even dangerously, according to researchers at Stanford University.

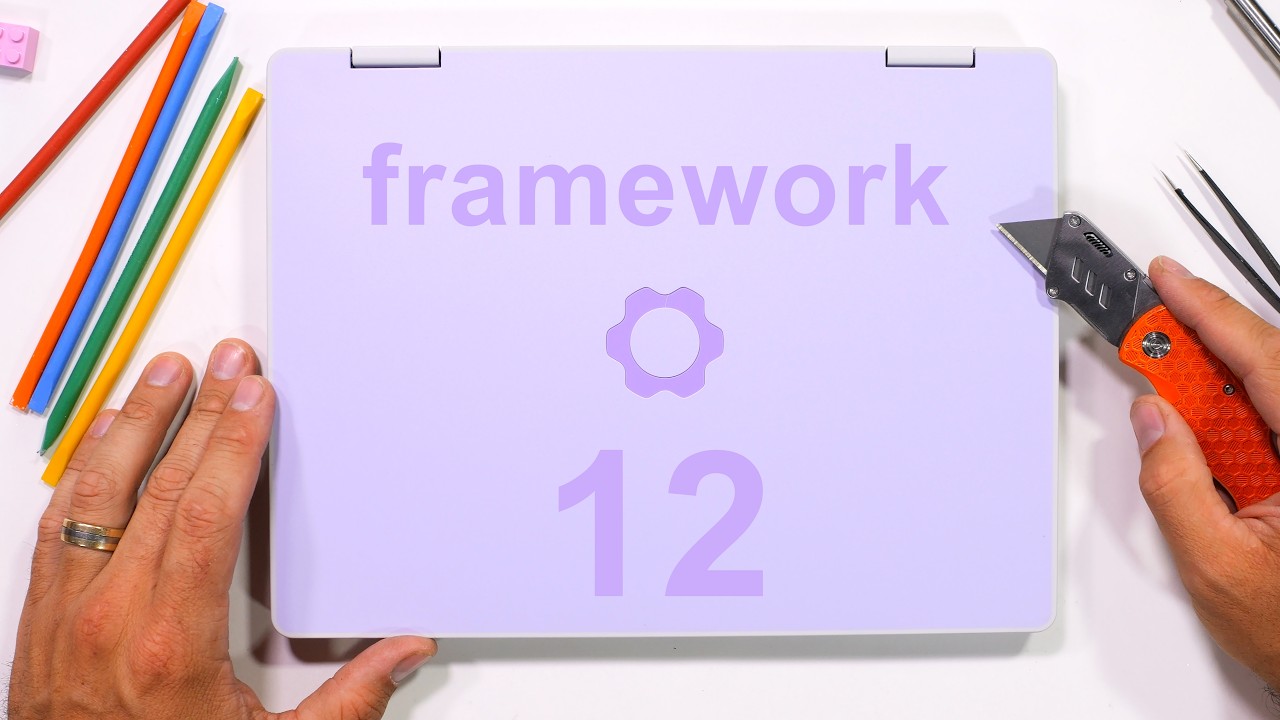

The Best Laptop on Earth... is almost too easy to fix.
